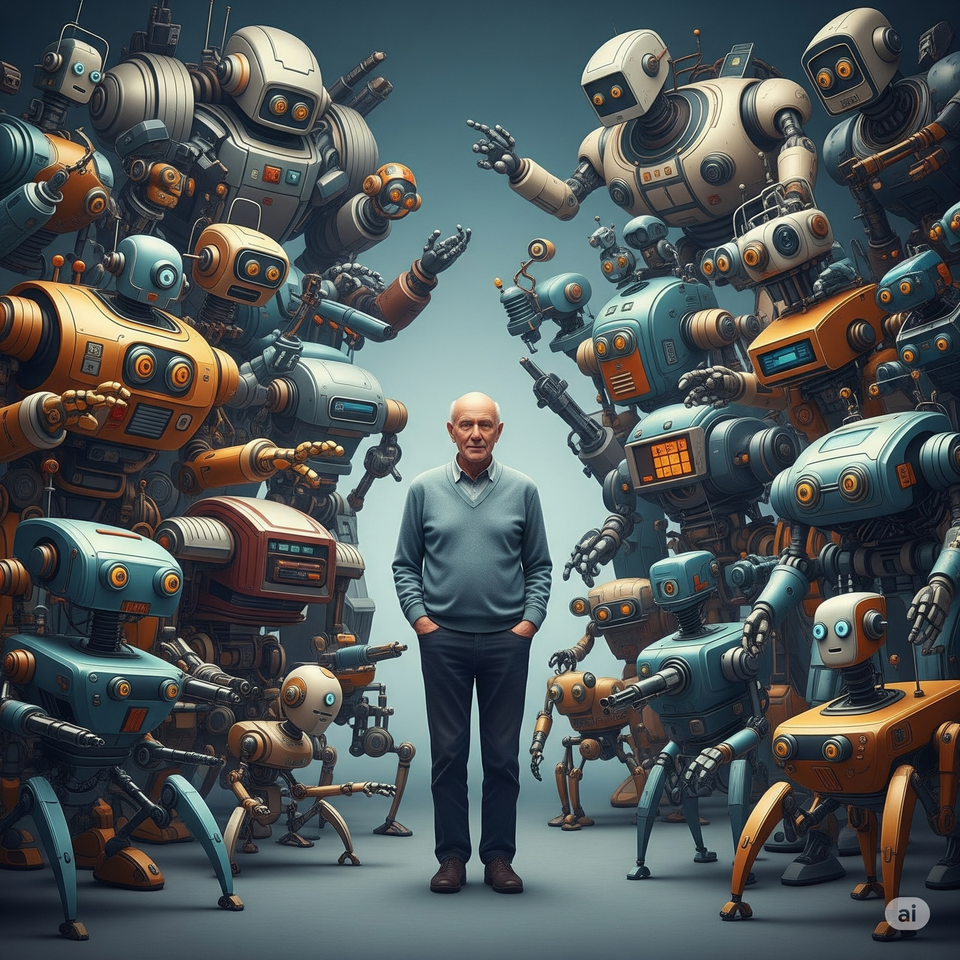

I am awash in robots!

As you know if you read this blog, I am a retired software engineer. I have never lost my love of all things that move autonomously, in whatever form they may take, be it drone, humanoid, or hexapod. So in the last few months, I have acquired quite a menagerie: legged, wheeled, treaded, small and large, some ready to run and others that must be painstakingly 3D printed and assembled. I have also increased my capacity for 3D printing with the latest 3D printer models, but that is a topic for another time.

My first objective has been to acquire the fundamentals of Reinforcement Learning (RL) and study the amazing impact it has had on the control of higher-level behaviors for mobile robots of all kinds. Thankfully the online curriculum is fabulous and inexpensive and the open source repos are replete with example implementations. At no other point in my life have I had everything I need: robots, computers, research, training, and most of all, the time to put them all to good use.

So what does a retired software engineer with aspirations in the world of robotics software do once he gets everything he ever wanted? Imagine a child instructed to choose one toy in the entire store, who has just been given permission to take all of the toys home. Which toys should he spend the most time with? Which toys will yield the most fulfilling learning experience? Ah, leave it to us humans to find a way to transform an island paradise into an island quandary.

I think the first project will be to contribute to the most basic of algorithms, the first target of almost anyone wanting to use a mobile robot in an unknown environment: localization. Most of today's localization algorithms are hard to use and require calibration and a lot of integration work. The Intel RealSense T265, in contrast, was a single sensor that generated coordinates relative to its starting position and greatly simplified localization. No software stack was required, no external compute resources, and no calibration. It just worked! Sadly, it was canceled shortly after Intel abandoned the RealSense product line.

With the availability of low-cost edge AI devices, it should be possible to resurrect this use case in the form of a container image (e.g. a Docker or Podman image) specifically configured to run on a particular development board. The device would provide coordinate data over a USB port and live in a 3D-printed enclosure of some kind. It would work only in indoor environments and would support traversal at high speed over varied terrain. Like the T265, its primary function would be to provide highly accurate coordinate data, exposing raw point cloud data only on demand, and it would function as a black box.
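To make the "coordinate data over a USB port" idea a little more concrete, here is a minimal sketch of what one pose record might look like on the wire. Everything in it is my own assumption for illustration: the `Pose` fields, the 40-byte frame layout, and the CRC32 trailer are hypothetical and are not taken from the T265 or from any existing localization stack.

```python
import struct
import zlib
from dataclasses import dataclass

# Hypothetical frame layout (little-endian), assumed for illustration only:
#   uint64   timestamp in microseconds
#   7 x f32  position x, y, z (meters) and orientation quaternion w, x, y, z
#   uint32   CRC32 of the 36 payload bytes above
_PAYLOAD = struct.Struct("<Q7f")
_CRC = struct.Struct("<I")
FRAME_SIZE = _PAYLOAD.size + _CRC.size  # 36 + 4 = 40 bytes


@dataclass(frozen=True)
class Pose:
    """One pose sample relative to the sensor's starting position."""
    timestamp_us: int
    x: float
    y: float
    z: float
    qw: float
    qx: float
    qy: float
    qz: float


def encode_pose(pose: Pose) -> bytes:
    """Pack a pose into a fixed-size frame with a CRC32 trailer."""
    payload = _PAYLOAD.pack(
        pose.timestamp_us,
        pose.x, pose.y, pose.z,
        pose.qw, pose.qx, pose.qy, pose.qz,
    )
    return payload + _CRC.pack(zlib.crc32(payload))


def decode_pose(frame: bytes) -> Pose:
    """Unpack a frame, raising ValueError if it is the wrong size or corrupted."""
    if len(frame) != FRAME_SIZE:
        raise ValueError(f"expected {FRAME_SIZE} bytes, got {len(frame)}")
    payload = frame[:_PAYLOAD.size]
    (expected_crc,) = _CRC.unpack(frame[_PAYLOAD.size:])
    if zlib.crc32(payload) != expected_crc:
        raise ValueError("CRC mismatch: frame is corrupted")
    return Pose(*_PAYLOAD.unpack(payload))
```

On the robot side, the host would read `FRAME_SIZE` bytes at a time from whatever serial device the board exposes and hand them to `decode_pose`. A fixed-size frame with a checksum keeps the consumer trivially simple, which is the whole point of a black box; a real design might prefer a text format or an existing message standard instead.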

I suspect that I will be able to leverage much of the work already done in this domain, integrating deep learning networks with existing localization stacks such as ORB-SLAM3. In the meantime, I am awash in robots, all eager for my attention, each promising a different kind of mobility. Who wants to be first!?
